Advanced Micro Devices Inc. is taking on Nvidia Corp. with the launch of a new AI accelerator chip, announced at its Data Center & AI Technology Premiere event.

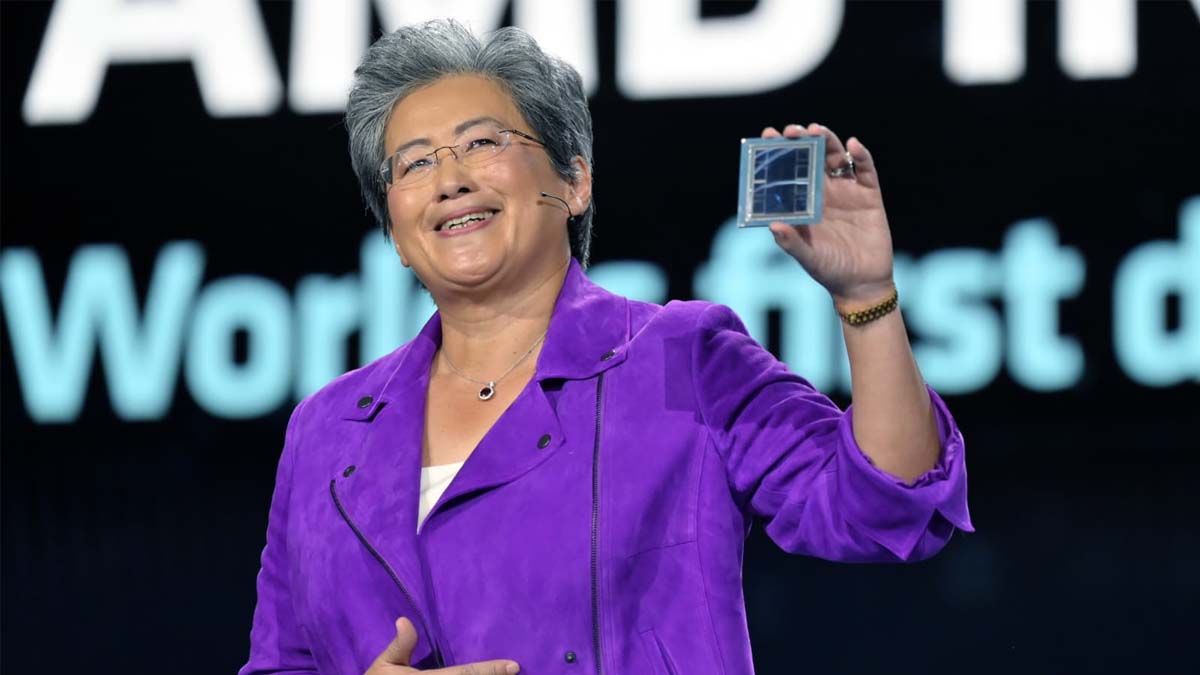

During a keynote speech, AMD CEO Lisa Su announced the company's new Instinct MI300X accelerator, which is targeted specifically at generative AI workloads. Generative AI is the technology that underpins, for example, OpenAI's chatbot ChatGPT and the text-to-image diffusion model Stable Diffusion.

She said customers will be able to use the MI300X to run generative AI models with up to 80 billion parameters. The chip will begin sampling to customers in the third quarter, with production set to ramp up before the end of the year.

AMD has said it sees a massive opportunity in an AI space that has been swept up by the hype around ChatGPT. “We think about the data center AI accelerator (market) growing from something like $30 billion this year, at over 50% compound annual growth rate, to over $150 billion in 2027,” Su said.

At present, Nvidia dominates the AI computing industry, with a market share of about 85%. The excitement around AI has driven massive gains in Nvidia's stock, and at the end of May it became the first chipmaker to reach a $1 trillion market capitalization.

Still, the hyperscale cloud AI accelerator market could be worth $125 billion within the next five years, and that is where AMD may be able to make its impact felt: cloud hyperscalers are heavily reliant on Nvidia and uncomfortable with the lack of a broad supply chain.

“Long-term, the cloud players can either create their own chips, continue to sole-source from Nvidia, or work with a second player like AMD,” Patrick Moorhead of Moor Insights & Strategy said. “I believe AMD, if it passes muster with its third-quarter sampling, could pick up incremental AI business between the fourth quarter of 2023 and first quarter of 2024. In addition, AI framework providers like PyTorch and foundation model providers like Hugging Face want more options to enhance their innovation too.”
